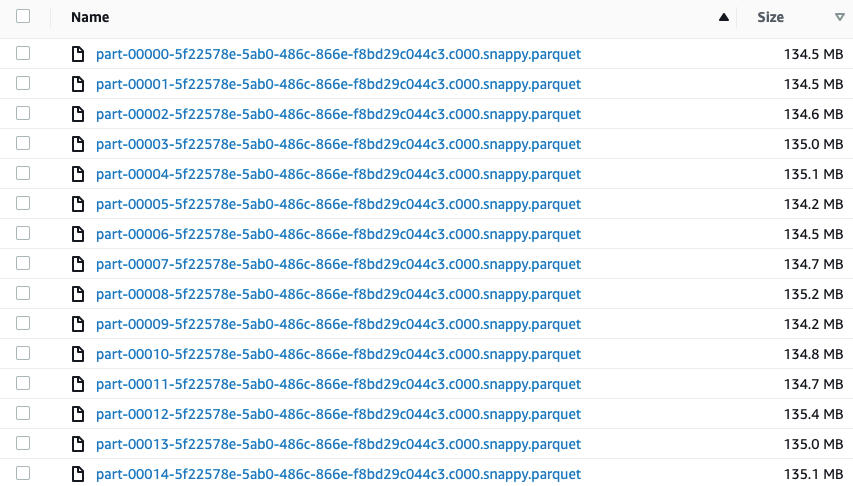

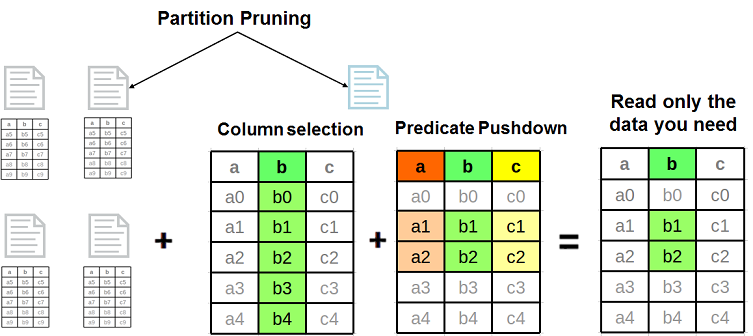

Re: Partition Redispatch S3 parquet dataset using column - how to run optimally? - Dataiku Community

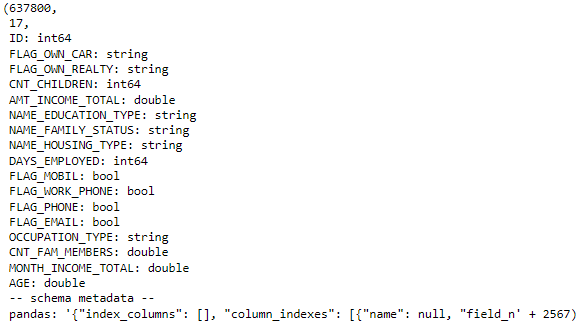

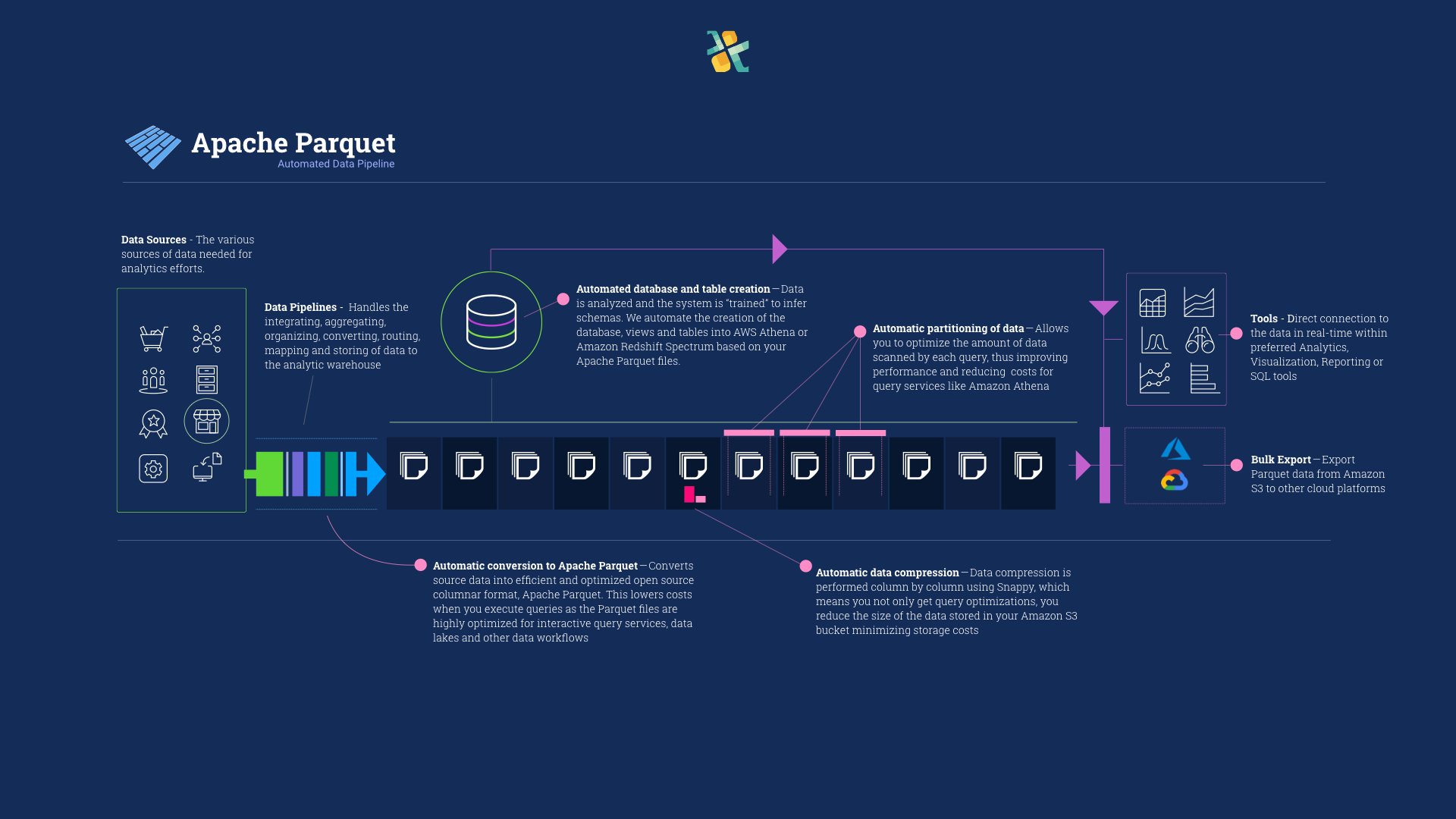

3 Quick And Easy Steps To Automate Apache Parquet File Creation For Google Cloud, Amazon, and Microsoft Azure Data Lakes | by Thomas Spicer | Openbridge